Re: [Starcluster] Multiple MPI jobs on SunGrid Engine with StarCluster


From: Damian Eads <no email>

Date: Mon, 21 Jun 2010 17:14:52 -0700

Hi Justin,

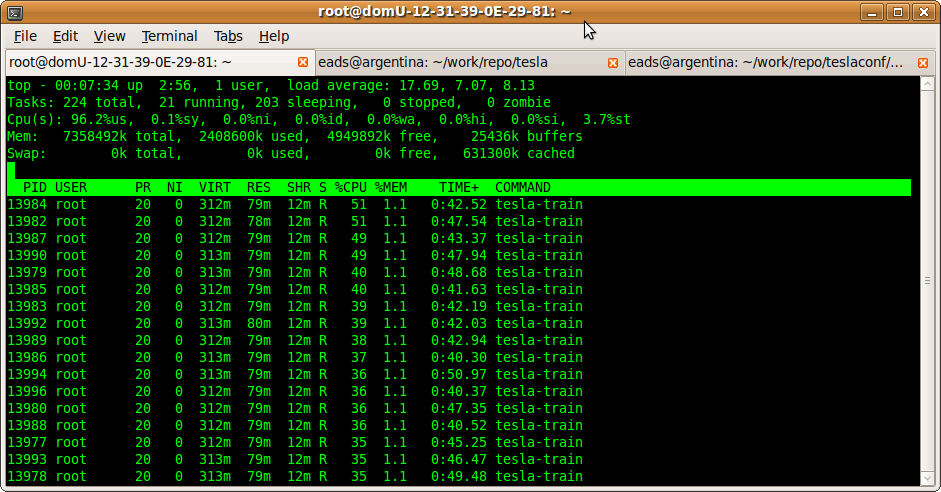

Please see the attached image. It shows the output of top when I try

to start a 20 process OpenMPI job using qsub. Notice that it doesn't

spread the processes across the cluster.

root_at_domU-12-31-39-0E-29-81:/data/tesla# start-large.sh birds-1.cfg

root_at_domU-12-31-39-0E-29-81:/data/tesla# cat ../teslaconf/scripts/start-large.sh

#!/bin/bash

NP=20

for i in $*

do

JOBNAME=`echo $i | sed 's/\.cfg//g' | sed 's/^.*\///g'`

OUTFILE=/tmp/az-${JOBNAME}.out

ERRFILE=/tmp/az-${JOBNAME}.err

export PATH=/data/teslaconf/scripts:/data/tesla:$PATH

CMD="qsub -N ${JOBNAME} -pe orte ${NP} -o ${OUTFILE} -e ${ERRFILE}

-wd /data/tesla -V ../teslaconf/scripts/tesla-train.sh ${NP} $i"

echo Running $CMD

$CMD

done
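
The job-name step in the loop above can also be written with basename, which strips the directory part and a trailing .cfg in one call. This is only a sketch: job_name is a hypothetical helper name, and unlike the sed pipeline it removes .cfg only at the end of the name, not everywhere it occurs.

```shell
#!/bin/sh
# Derive an SGE job name from a config path: drop the directory part
# and a trailing ".cfg". job_name is a hypothetical helper name.
job_name() {
    basename "$1" .cfg
}

# Quoting "$@" (instead of a bare $*) keeps arguments with spaces intact.
for cfg in "$@"; do
    name=$(job_name "$cfg")
    echo "${cfg}: job ${name}, stdout /tmp/az-${name}.out, stderr /tmp/az-${name}.err"
done
```

Run with one or more config paths, it prints the job name and output files that start-large.sh would use for each of them.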

root_at_domU-12-31-39-0E-29-81:/data/tesla# cat ../teslaconf/scripts/tesla-train.sh

#!/bin/bash

PYTHON_VERSION=python2.6

PYTHONPATH=/data/prefix/python2.6/site-packages

mpirun -hostfile /data/teslaconf/machines -wd /data/tesla \
  -n $1 -x PYTHONPATH=/data/prefix/lib/python2.6/site-packages \
  ./tesla-train --config $2
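
For comparison, here is a minimal sketch of tesla-train.sh that follows the advice quoted further down: no -hostfile and no -n, so that mpirun takes its hosts and process count from the "qsub -pe orte N" allocation. It assumes the installed Open MPI was built with gridengine support (untested on this cluster). MPIRUN and SITE_PACKAGES are hypothetical variable names introduced only for this sketch.

```shell
#!/bin/sh
# Sketch of tesla-train.sh without -hostfile and -n.
# Assumption: mpirun comes from an Open MPI build with gridengine support,
# so hosts and slot count come from the "qsub -pe orte N" allocation.
# MPIRUN is a hypothetical override, used here only for dry runs.

SITE_PACKAGES=/data/prefix/lib/python2.6/site-packages

run_training() {
    # $1: path to the training config, e.g. birds-1.cfg
    "${MPIRUN:-mpirun}" -wd /data/tesla \
        -x "PYTHONPATH=${SITE_PACKAGES}" \
        ./tesla-train --config "$1"
}

# Launch only when a config file is given on the command line.
if [ "$#" -ge 1 ]; then
    run_training "$1"
fi
```

With this variant start-large.sh would pass only $i to the script, not ${NP} $i. Setting MPIRUN=echo prints the mpirun arguments instead of launching anything, which makes it easy to check the command line before submitting.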

Ideas?

Thanks,

Damian

On Mon, Jun 21, 2010 at 10:02 AM, Damian Eads <eads_at_soe.ucsc.edu> wrote:

> Hi Justin,

>

> Thank you for your explanation on MPI/SGE integration. A few quick questions:

>

> On Mon, Jun 21, 2010 at 9:46 AM, Justin Riley <jtriley_at_mit.edu> wrote:

>> So the above explains the parallel environment setup within SGE. It

>> turns out that if you're using a parallel environment with OpenMPI, you

>> do not have to specify --byslot/--bynodes/-np/-host/etc options to

>> mpirun given that SGE will handle the round_robin/fill_up modes for you

>> and automatically assign hosts and number of processors to be used by

>> OpenMPI.

>>

>> So, for your use case I would change the commands as follows:

>> -----------------------------------------------------------------

>> qsub -pe orte 24 ./myjobscript.sh experiment-1

>> qsub -pe orte 24 ./myjobscript.sh experiment-2

>> qsub -pe orte 24 ./myjobscript.sh experiment-3

>> ...

>> qsub -pe orte 24 ./myjobscript.sh experiment-100

>>

>> where ./myjobscript.sh calls mpirun as follows

>>

>> mpirun -x PYTHONPATH=/data/prefix/lib/python2.6/site-packages

>> -wd /data/experiments ./myprogram $1

>

> So I don't need to specify the number of slots to use in mpirun? The

> Sun GridEngine will somehow pass this information to mpirun? Is the

> mpirun argument -n 1 by default?

>

>> -----------------------------------------------------------------

>> NOTE: You can also pass -wd to the qsub command instead of mpirun and

>> along the same lines I believe you can pass -v option to qsub rather

>> than -x to mpirun. Neither of these should make a difference, just

>> shifts where the -x/-wd concern is (from MPI to SGE).

>

> Good idea. This will clean things up a bit. Thanks for suggesting it.

>

>> I will add a section to the docs about using SGE/OpenMPI integration on

>> StarCluster based on this email.

>>

>>> Perhaps if carefully

>>> used, this will ensure that there is a root MPI process running on the

>>> master node for every MPI job that's simultaneously running.

>>

>> Is this a requirement for you to have a root MPI process on the master

>> node for every MPI job? If you're worried about oversubscribing the

>> master node with MPI processes, then this SGE/OpenMPI integration should

>> relieve those concerns. If not, what's the reason for needing a 'root

>> MPI process' running on the master node for every MPI job?

>

> It's not a requirement but reflects some ignorance on my part. Maybe

> I'm confused about why the first node is called master. I was assuming

> it had that name because it was performing some kind of special

> coordination.

>

> Do I still need to provide the -hostfile option? Or is this automatic now?

>

> Thanks a lot!

>

> Damian

>

> -----------------------------------------------------

> Damian Eads Ph.D. Candidate

> University of California Computer Science

> 1156 High Street Machine Learning Lab, E2-489

> Santa Cruz, CA 95064 http://www.soe.ucsc.edu/~eads

>
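
On the questions above about how SGE hands the slot count and host list to the job: inside a job submitted with -pe, SGE sets NSLOTS to the number of granted slots and PE_HOSTFILE to the path of a file with one line per host (host name, slot count, queue, processor range). The following is a rough sketch for printing that allocation from within a job script, which may help show whether the 20 slots were actually spread over several nodes. summarize_pe_hostfile is a hypothetical helper name.

```shell
#!/bin/sh
# Summarize the slot allocation SGE granted to a parallel job.
# Each line of the PE hostfile is: <host> <slots> <queue> <processor-range>
# summarize_pe_hostfile is a hypothetical helper name.
summarize_pe_hostfile() {
    awk '{ printf "%s %d\n", $1, $2; total += $2 }
         END { printf "total %d\n", total }' "$1"
}

# SGE sets PE_HOSTFILE and NSLOTS only inside a job submitted with -pe;
# outside such a job this part does nothing.
if [ -n "${PE_HOSTFILE:-}" ]; then
    echo "NSLOTS=${NSLOTS:-unset}"
    summarize_pe_hostfile "$PE_HOSTFILE"
fi
```

Placed at the top of the job script, this writes the per-host slot counts to the job's output file (the -o file given to qsub) before mpirun starts.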

Received on Mon Jun 21 2010 - 20:14:53 EDT

--

-----------------------------------------------------

Damian Eads Ph.D. Candidate

University of California Computer Science

1156 High Street Machine Learning Lab, E2-489

Santa Cruz, CA 95064 http://www.soe.ucsc.edu/~eads

(image/png attachment: problem.png)